Autocomplete Newsletter. Multimodal GPT4 next week?, 'Indirect prompt injection' attacks. Grammarly Go!, OpenAI Crushing competition price wise.

Will 'For Free' be the only option?

GPT-4 is coming next week – and it will be multimodal, says Microsoft Germany.

GPT-4 is coming next week: at an approximately one-hour hybrid information event entitled "AI in Focus - Digital Kickoff" on 9 March 2023, four Microsoft Germany employees presented Large Language Models (LLM) like GPT series as a disruptive force for companies and their Azure-OpenAI offering in detail. The kickoff event took place in the German language; news outlet Heise was present. Rather casually, Andreas Braun, CTO of Microsoft Germany and Lead Data & AI STU, mentioned what he said was the imminent release of GPT-4. The fact that Microsoft is fine-tuning multimodality with OpenAI should no longer have been a secret since the release of Kosmos-1 at the beginning of March.

"We will introduce GPT-4 next week."

"We will introduce GPT-4 next week, there we will have multimodal models that will offer completely different possibilities – for example, videos," Braun said. The CTO called LLM a "game changer" because they teach machines to understand natural language, which then understands statistically what was previously only readable and understandable by humans. In the meantime, the technology has come so far that it basically "works in all languages": You can ask a question in German and get an answer in Italian. With multimodality, Microsoft(-OpenAI) will "make the models comprehensive"”

Lofi Workday. 100% AI-Generated lofi live stream for study and work!

'Indirect prompt injection' attacks could upend chatbots.

ChatGPT's explosive growth has been breathtaking. Barely two months after its introduction last fall, 100 million users had tapped into the AI chatbot's ability to engage in playful banter, argue politics, generate compelling essays, and write poetry.

"In 20 years following the internet space, we cannot recall a faster ramp in a consumer internet app," analysts at UBS investment bank declared earlier this year.

That's good news for programmers, tinkerers, commercial interests, consumers, and members of the general public, all of whom stand to reap immeasurable benefits from enhanced transactions fueled by AI brainpower.

But the bad news is whenever there's an advance in technology, scammers are not far behind.

A new study published on the pre-print server arXiv has found that AI chatbots can be easily hijacked and used to retrieve sensitive user information.

Researchers at Saarland University's CISPA Helmholtz Center for Information Security reported last month that hackers could employ a procedure called indirect prompt injection to insert malevolent components into a user-chatbot exchange surreptitiously.

https://techxplore.com/news/2023-03-indirect-prompt-upend-chatbots.html

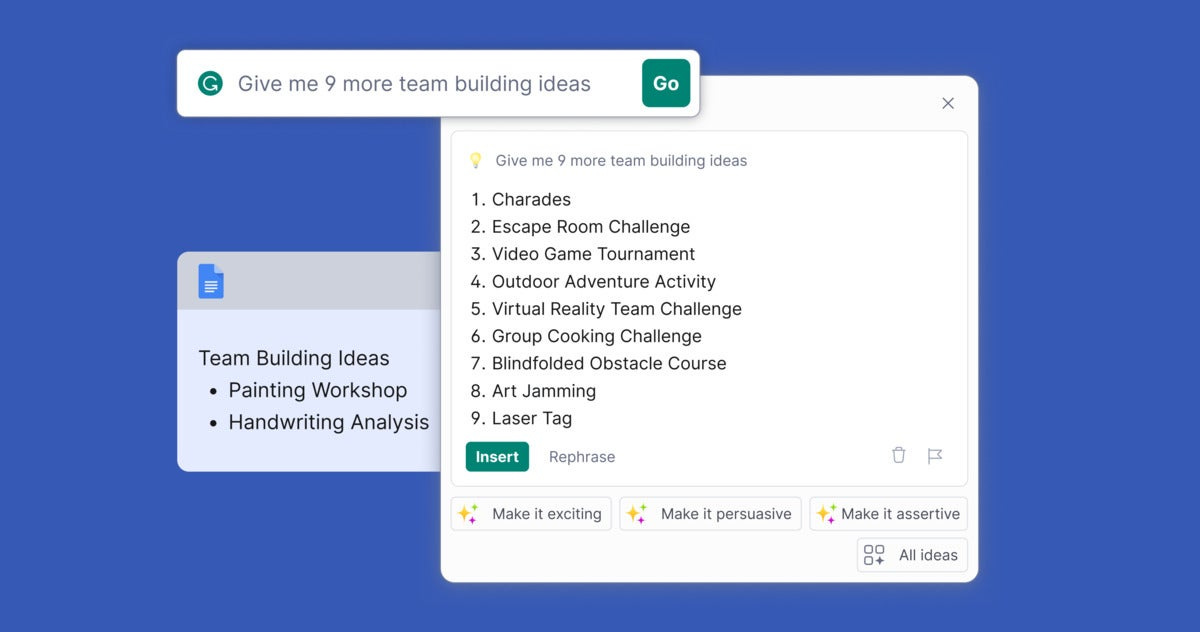

Grammarly Go and the coming wave of generative AI productivity

Grammarly Go is a prompt-based implementation of ChatGPT (though it could use other frameworks in the future). If you are writing a paper or a column like this one, it will ask you a series of questions — and from the resulting answers, it will write the piece faster than you could type it. The questions it asks are about tone and content and what specific data you want to be included, and the result is something that appears to have been custom-written by you.

It won’t capture your personal style well until it learns how you write or until it writes enough content that it becomes the way you write. I expect future versions will better tell context and, from your historical writing, be able to better emulate exactly how you would write a piece.

Has the generative AI pricing collapse already started?

OpenAI is currently the biggest LLM provider, though there is growing competition from Cohere, AI21, Anthropic, Hugging Face, and others. These companies generally sell their output on a “per-token” basis, with a token representing approximately three-quarters of a word.

To give you an example, the baseline price of a very powerful OpenAI model called Davinci is 2 cents for a thousand tokens—which is to say about 750 words. This means it would cost Jasper about 8 cents to get Davinci to write six 500-word blog posts for you.

Prosperity for Jasper lies in pricing plans and subscription models that let the company charge you more than 8 cents for those posts. Prosperity for you lies in getting more value from those posts in the form of clicks, customer leads, sales, and donations than you paid Jasper to pay Davinci to write them. For good or ill, this dynamic will plainly lead to a tsunami of new “content” flooding the Internet in very short order.

https://arstechnica.com/gadgets/2023/03/has-the-generative-ai-pricing-collapse-already-started/